Case Study: Automating Email-to-Order Processing in the Healthcare Industry

Transform emails from customers into complex enterprise workflows like filling out orders with generative AI.

Overview

A leading company in the healthcare industry processes millions of order emails per year, each containing multiple product requests. Their SKU database contains over 200,000 entries, with varying formats – some products include structured parameters, while others rely solely on descriptions.

Complicating matters, order requests arrive in unstructured formats, including:

Emails with free-text product requests.

Attachments in PDF, CSV, and image formats, including scanned faxes and handwritten notes from doctors.

Documents that may be rotated, upside-down, or of varying quality.

Customer Service (CS) agents were manually reading these emails and attachments to find matching SKUs and quantities, making this a time-consuming and error-prone process.

Previous Attempts & Challenges

Before engaging with Myko AI, the company had attempted multiple solutions:

Consulting Firm Approach – A consulting firm was hired for tens of millions of dollars but failed to deliver a functional solution.

Internal AI Initiative – The company built an internal solution using OpenAI’s Assistant API with their SKU database loaded into a code interpreter.

The system used the latest AI model available, supplemented with predefined instructions.

Accuracy was only 44%, making it impractical for deployment.

At this point, the company approached us for help.

Our Approach

We recognized that this problem fit within the set of challenges our AI technology is designed to solve – extracting actionable data from raw, unstructured inputs. The expected output for each processed order was a structured JSON format:

{"sku": "abcd1234", "quantity": 20}

Step 1: Understanding the Inputs & Expected Outputs

We collected raw sample emails and attachments along with their correct SKU and quantity mappings to establish a benchmark dataset.

Since the company had already developed an internal system, we gathered existing instructions and processing rules to bootstrap our AI models.

Step 2: Initial AI Model Deployment

We built our first AI solution using multiple models designed to extract relevant product information from emails and attachments.

Initial accuracy: 70% – a significant improvement but not yet production-ready.

Based on this, the company agreed to deploy our AI in a QA/Sandbox environment.

Step 3: Testing in Sandbox & Identifying Key Error Sources

In the sandbox, our AI processed real production emails, but its outputs were logged separately for validation without affecting live operations.

Challenge 1: PDF & Image Parsing Issues

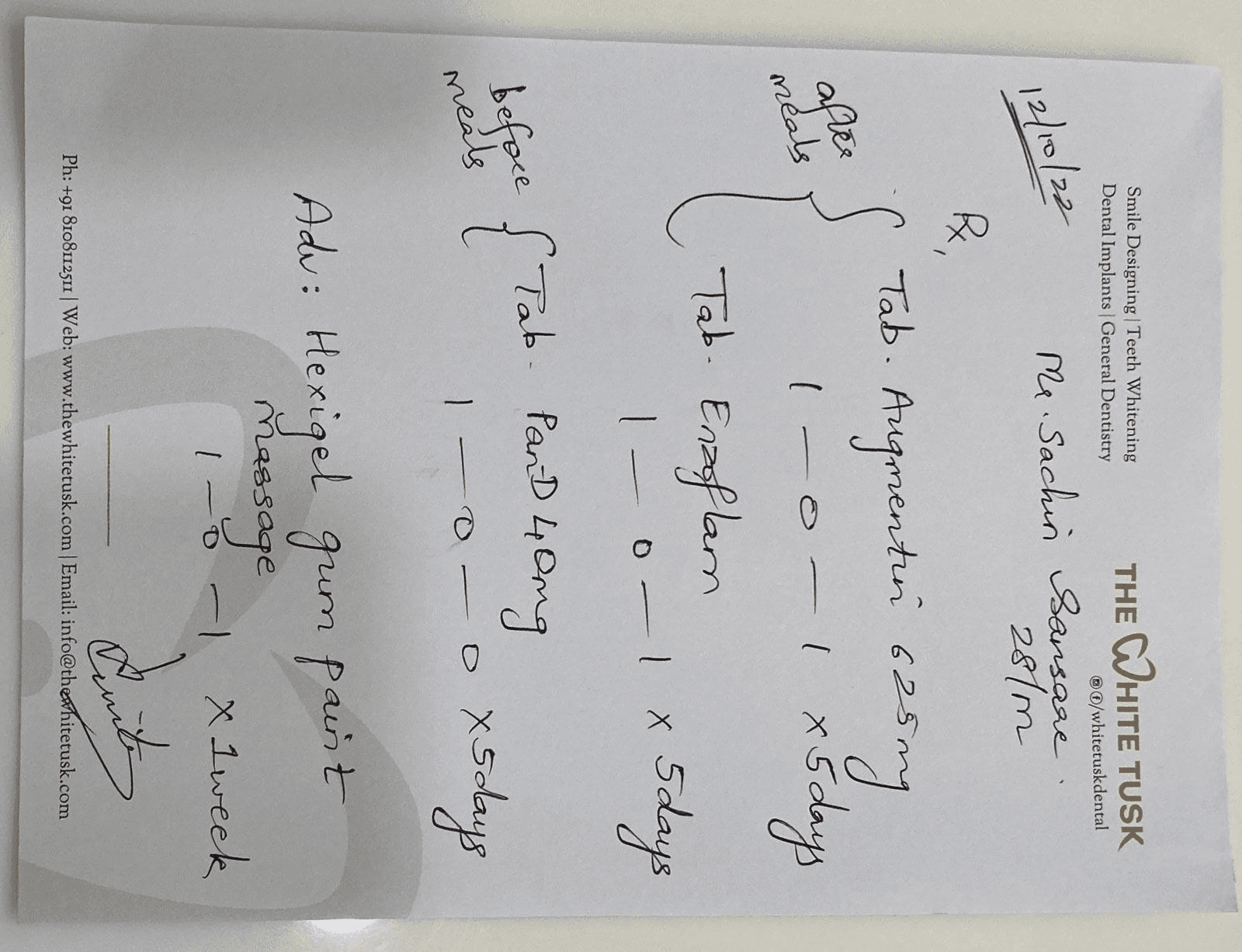

(not a real image from our customer but one to illustrate)

Scanned faxes, handwritten notes, and promotional flyers were not parsed accurately.

Even state-of-the-art solutions from leading tech firms struggle with these challenges.

Our solution: We built a custom LLM model tailored for document parsing. By training on real production data, we achieved near 100% accuracy in extracting product details from PDFs and images.

Challenge 2: Uncovering Hidden Business Logic

AI misclassifications revealed critical undocumented knowledge that CS agents had been applying manually:

Regional SKU Rules – Some SKUs were flagged as correct matches, but later rejected because they were not used in certain regions. Instead, specific packaging variations required different SKUs, known only through word-of-mouth within the CS team.

Pack Size Mismatches – Some SKUs officially represented single-unit packaging, but customers assumed packs contained three units. Orders had to be multiplied by a factor of 3, but this was undocumented.

Undocumented Quantity Multipliers – Certain SKUs had hidden unit size factors, meaning quantities needed adjustment.

Acronym vs. Full Product Name Confusion – The SKU database used acronyms, while customer orders contained full product names, leading to matching errors.

Step 4: Continuous Improvement Through Feedback Loop

Every misclassification was analyzed to extract the underlying business logic.

Our AI absorbed these rules and adjustments iteratively, refining its ability to correctly match SKUs.

These insights formed a semantic layer that embedded real-world business logic into the AI’s decision-making process.

Step 5: Final Deployment & Impact

Through continuous reinforcement learning and business logic integration, our AI reached 95% accuracy – a game-changing improvement from the initial 44% benchmark of previous solutions.

CS agents saved an average of 2 minutes per email.

With millions of emails processed per year, this translated into significant time and cost savings for the company.

Final Thoughts: AI is Not Fully Autonomous – Yet

Like self-driving cars, AI for email-to-order processing is not yet "Level 5" autonomy.

LLMs still face challenges such as hallucination and imperfect instruction-following.

However, by leveraging a strong feedback loop and an AI-consumable semantic layer, we maximize accuracy while keeping humans in the loop where needed.

Conclusion

This project demonstrated that AI alone cannot solve complex enterprise workflows without integrating business logic. The success of our solution was not just in improving accuracy but in systematically surfacing and structuring hidden business knowledge.

For enterprises dealing with high-volume, unstructured data, this case proves that AI – when combined with iterative learning and domain-specific logic extraction – can deliver real, measurable impact.