May 13, 2025

Guaranteed AI Accuracy in Enterprise Workflows: Bridging Raw Data with Business Logic

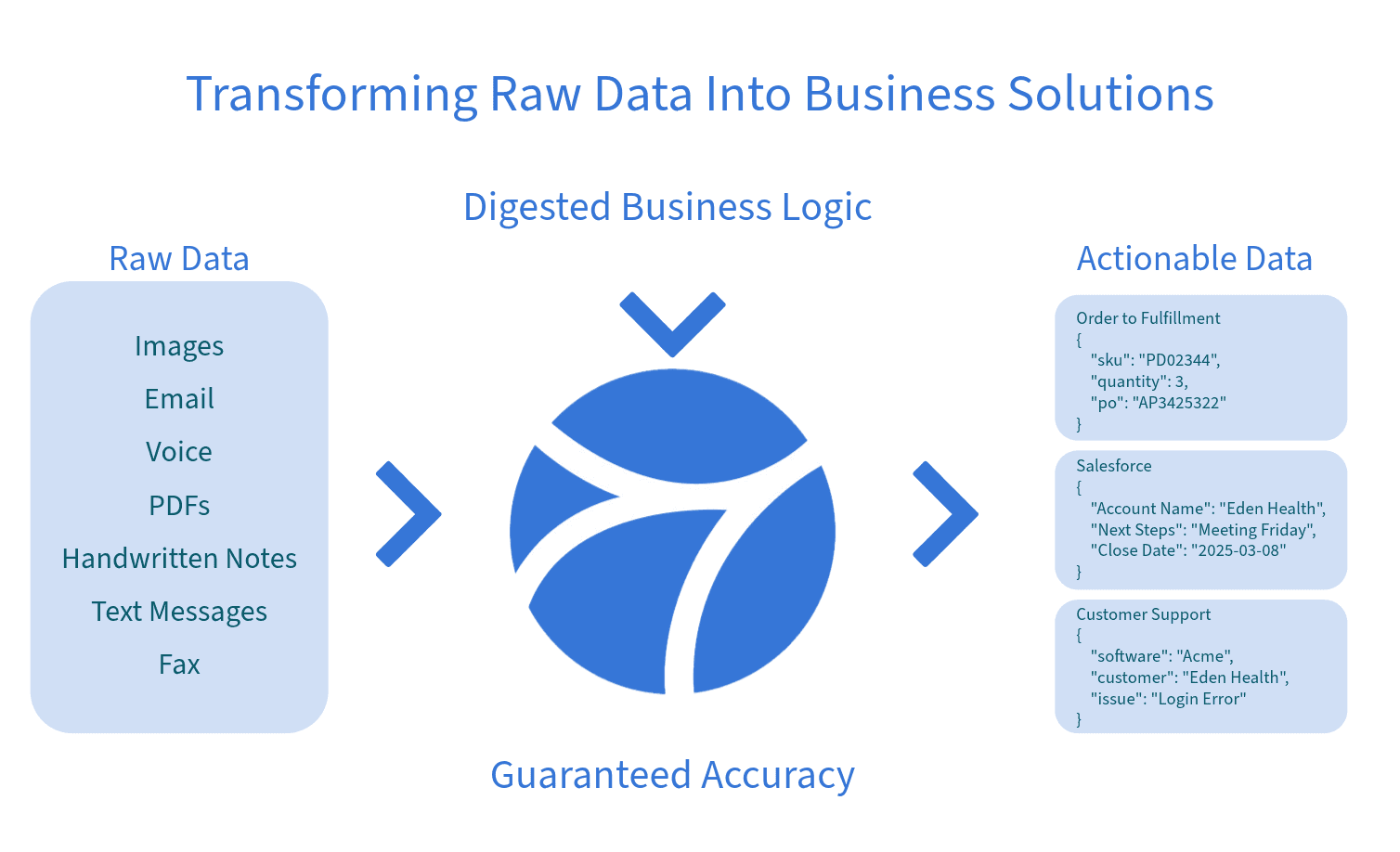

AI agents can transform unstructured data into structured fields within existing software by applying business logic, saving time on administrative workflows.

Introduction

Enterprise AI struggles with raw, unstructured data, which makes up 80-90% of enterprise information [1]. Without clear business logic, AI models misinterpret context and make errors [2].

Myko addresses this problem by taking a business logic-first approach to AI in enterprise workflows. Instead of relying on AI alone, Myko iteratively extracts and formalizes the hidden business logic that companies apply when handling data. By mapping raw data to the company’s specific rules and processes, Myko ensures that AI agents operate within the correct context. The result is an AI-driven workflow that turns messy inputs into actionable outputs with guaranteed accuracy, combining the efficiency of automation with the reliability of human expertise.

Challenges in Processing Raw Data with AI in Enterprise Workflows

AI alone lacks awareness of a company’s unique processes, making raw data automation challenging. Key obstacles include:

Emails to Orders: One of the most common enterprise workflows that AI aims to automate is extracting structured product orders from unstructured email conversations. On the surface, this seems like a straightforward task – AI reads an email, identifies the products mentioned, finds the corresponding SKUs in a database, and generates a structured order. However, in practice, this process is fraught with hidden business logic that AI models cannot infer without explicit human feedback.

Example 1: Regional SKU Rules – The AI matched the product to a valid SKU, but the customer’s region required a different variant because of packaging differences. This rule was undocumented and known only to the team.

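Example 2: Implicit Unit Conversions – Customers mistakenly assumed a SKU contained three items when it actually contained one, so quantities taken literally from their emails produced incorrect orders. The team knew to correct for this, but the rule was undocumented and surfaced only through feedback.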
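One way to make these two tacit rules explicit is to store them as data next to the product catalog, so the AI’s SKU lookup consults them instead of guessing. The sketch below assumes a simple in-memory catalog; the SKU codes, customer IDs, regions, and the `resolve_line` helper are all hypothetical, and the unit-conversion rule assumes the customer’s quantity counts packs they believe hold three items.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Sku:
    code: str
    product: str
    region: str
    units_per_sku: int


# Hypothetical catalog: codes, regions, and pack sizes are illustrative only.
CATALOG = [
    Sku("CUP-100-US", "paper cup", "US", 1),
    Sku("CUP-100-EU", "paper cup", "EU", 1),
]

# Tacit rule 1 (hypothetical data): the customer's region decides which
# packaging variant they receive.
CUSTOMER_REGION = {"acme-gmbh": "EU", "acme-inc": "US"}

# Tacit rule 2 (hypothetical data): this customer treats each SKU as a 3-pack,
# so the quantity in their email counts packs of 3 items.
ASSUMED_PACK_SIZE = {("acme-gmbh", "CUP-100-EU"): 3}


def resolve_line(customer_id: str, product: str, quantity: int) -> tuple[str, int]:
    """Turn an extracted (product, quantity) pair into a concrete SKU and SKU count."""
    region = CUSTOMER_REGION[customer_id]
    # Regional rule: choose the variant for the customer's region instead of
    # the first catalog entry whose product name matches.
    sku = next(s for s in CATALOG if s.product == product and s.region == region)
    # Unit-conversion rule: convert the customer's quantity into items using the
    # pack size they assume, then into the number of real SKUs to ship.
    items = quantity * ASSUMED_PACK_SIZE.get((customer_id, sku.code), sku.units_per_sku)
    return sku.code, items // sku.units_per_sku


print(resolve_line("acme-gmbh", "paper cup", 2))  # ('CUP-100-EU', 6)
print(resolve_line("acme-inc", "paper cup", 2))   # ('CUP-100-US', 2)
```

Keeping the rules as data rather than burying them in prompts means a newly discovered exception is a one-line table change.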

Customer Support Logging: Converting spoken support transcripts into CRM entries is another challenge. Summarizing a transcript is easy, but categorizing and logging that information correctly in a CRM system requires more than transcription; it demands an understanding of the company’s workflow rules. Without guidance, an AI might produce a verbatim transcript without knowing which parts are the “important bits” to record as structured data (e.g. creating a new case or identifying a specific software issue). For instance, an AI might fail to identify the specific product the customer is referring to, so the information never reaches the relevant product team. The AI can log the outcome correctly only with business logic that combines codified rules with the implicit rules used in the organization (in this case, rules for interpreting common sales phrases). Without clear rules, critical details can be omitted or misfiled. This scenario underscores that context and business rules (such as how to distinguish an opportunity update from a general remark) are needed for the AI to perform as well as a human assistant.
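The product-identification problem above can be pictured as an alias table that maps the phrases customers actually use to a catalog product and its owning team. This is a minimal sketch under stated assumptions: the aliases, product names, team names, and the `build_case` helper are hypothetical, and simple substring matching stands in for whatever matching a production system would use.

```python
# Hypothetical alias table: phrases customers use on calls, mapped to the
# catalog product name and the team that owns it. Entries are illustrative.
PRODUCT_ALIASES = {
    "reporting thing": ("Analytics Dashboard", "analytics-team"),
    "dashboard": ("Analytics Dashboard", "analytics-team"),
    "sync app": ("Data Sync Agent", "integrations-team"),
}


def build_case(transcript: str) -> dict:
    """Create a CRM case record from a support transcript using the alias table."""
    text = transcript.lower()
    for alias, (product, team) in PRODUCT_ALIASES.items():
        if alias in text:
            # First matching alias wins; dict insertion order sets the priority.
            return {"type": "support_case", "product": product, "owner_team": team}
    # No known alias: leave the product unset so a human fills it in,
    # instead of guessing and misfiling the case.
    return {"type": "support_case", "product": None, "owner_team": None}


case = build_case("Hi, the sync app keeps crashing when I log in.")
print(case["product"], case["owner_team"])  # Data Sync Agent integrations-team
```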

These examples highlight a broader issue: much of the essential knowledge for processing such data is unwritten, ambiguous, or inaccessible to AI. Often, the rules for handling an email order or a customer call are not documented in any official manual – they live in the minds of experienced employees or in tribal team conventions. As one industry expert observed, a company’s most valuable knowledge “isn’t written down in any manual… It exists in the intuitive understanding of [its] best employees”. This kind of “invisible” expertise (tacit knowledge) has historically been impossible to capture and scale in software [3]. Consequently, when an AI system is deployed without that hidden logic, it ends up guessing or hallucinating rules, resulting in inconsistent and inaccurate outcomes. The challenge for enterprises is clear: to make AI work reliably on raw data, they must first surface and define the business logic that today resides informally in people’s heads or scattered documents.

Myko iteratively extracts business logic and integrates it into AI workflows, so that AI decisions follow real-world company rules instead of emerging from a black box.

This process begins with Myko working closely with the customer to understand how their team currently processes raw data. Myko combines proprietary AI with human review to understand the manual workflow and identify all the implicit rules being applied, such as “if an email order mentions expedited shipping, use courier X and add a 15% fee” or “if a voice call contains a complaint keyword, flag it as a high-priority support ticket.” These kinds of rules often stay undocumented until someone draws them out through careful interviews and analysis. Myko’s team collects these insights in a structured form, effectively building a custom knowledge base of business logic for the AI.
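Rules like the two quoted above can be captured in a structured form as condition/action pairs. The sketch below is illustrative only: the `Rule` type, the keyword list, and the field names are assumptions, and it applies the 15% fee to a hypothetical `subtotal` field.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Rule:
    """A business rule: a condition on the raw text and an action on the record."""
    name: str
    condition: Callable[[str], bool]
    action: Callable[[dict], None]


def add_expedited_shipping(order: dict) -> None:
    order["carrier"] = "courier X"
    order["fee"] = round(order["subtotal"] * 0.15, 2)  # 15% expedite fee


def flag_high_priority(ticket: dict) -> None:
    ticket["priority"] = "high"


# Hypothetical keyword list; the real list would come from the support team.
COMPLAINT_KEYWORDS = ("complaint", "refund", "broken")

EMAIL_ORDER_RULES = [
    Rule("expedited-shipping", lambda text: "expedited" in text, add_expedited_shipping),
]
CALL_RULES = [
    Rule("complaint-priority",
         lambda text: any(k in text for k in COMPLAINT_KEYWORDS),
         flag_high_priority),
]


def apply_rules(text: str, record: dict, rules: list[Rule]) -> list[str]:
    """Apply every matching rule to the record; return the names of rules that fired."""
    fired = []
    for rule in rules:
        if rule.condition(text.lower()):
            rule.action(record)
            fired.append(rule.name)
    return fired


order = {"subtotal": 200.0}
print(apply_rules("Please use EXPEDITED shipping.", order, EMAIL_ORDER_RULES))
print(order)  # {'subtotal': 200.0, 'carrier': 'courier X', 'fee': 30.0}
```

Returning the names of the rules that fired gives reviewers a record of which business logic produced each output.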

Crucially, Myko’s approach is iterative and incremental. Not all business logic will be discovered in one pass; some of it surfaces only when exceptions occur or when the AI makes an error and a human corrects it. Myko therefore runs a cycle of deploying the AI in a controlled setting, observing its outputs, and continuously collecting feedback. Each iteration lets the AI learn from mistakes and edge cases, progressively refining its understanding of the task. This “learning loop” ensures that the AI system evolves with a deepening grasp of the enterprise’s unique data and rules. As one overview of human-in-the-loop AI notes, continuous human involvement enables ongoing adjustments and improvements, allowing the AI to handle complex or ambiguous situations that purely automated systems would miss [4]. In practice, Myko updates the AI’s instructions whenever a new scenario is encountered: for instance, if a customer uses a new shorthand in an email or a new product code appears, that knowledge is added to the logic repository.

By capturing hidden business logic and feeding it into the AI, Myko creates a sort of semantic layer custom-tailored to the enterprise. This layer sits between the raw data and the AI’s decisions, so the AI is never operating just on raw text or audio alone – it’s always consulting the business logic rules that apply. Over time, as this knowledge base grows, the AI’s accuracy improves dramatically. What started as an AI with a generic understanding becomes an AI that “speaks the language of the business.” This methodical extraction of tacit knowledge bridges the gap between what employees intuitively know and what the AI formally understands. The result is an AI solution that mirrors the decision-making of the best human experts at the company, but with the speed and scale of automation.

How Myko Guarantees Accuracy

Myko ensures accuracy through benchmarking, testing, and iterative refinement. Before deployment, the AI is rigorously validated to confirm that it incorporates all necessary business logic. The key steps in this process are:

Building the Benchmark – Myko begins by working with the customer to define the expected output for a variety of sample inputs. This means creating a benchmark dataset or set of examples that illustrate how raw data should be mapped to actionable data in the target system. For instance, if the task is processing email orders, Myko and the client will compile a collection of real email examples and the correct extracted order details for each. This serves as the “source of truth” for what the AI must achieve. By establishing upfront what a correct outcome looks like, both Myko and the client have a clear mapping of raw-to-structured data to aim for.
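A benchmark can be as simple as a list of (raw input, expected output) pairs plus a scoring function. This sketch assumes all-or-nothing scoring per email; the `OrderLine` and `BenchmarkCase` types, the example emails, and the SKU codes are hypothetical.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class OrderLine:
    sku: str
    quantity: int


@dataclass(frozen=True)
class BenchmarkCase:
    """One agreed example: a raw email and the order it must produce."""
    email: str
    expected: tuple[OrderLine, ...]


# Hypothetical cases; a real benchmark uses the client's own emails and answers.
BENCHMARK = [
    BenchmarkCase("Please send 2 cases of paper cups to our Berlin office.",
                  (OrderLine("CUP-100-EU", 2),)),
    BenchmarkCase("Need 5 lids and 5 cups, same as last time.",
                  (OrderLine("LID-20", 5), OrderLine("CUP-100-US", 5))),
]


def case_passes(case: BenchmarkCase, predicted: list[OrderLine]) -> bool:
    # All-or-nothing: every line must match; line order is ignored.
    return Counter(predicted) == Counter(case.expected)


def benchmark_accuracy(extract: Callable[[str], list[OrderLine]]) -> float:
    """Fraction of benchmark emails the extractor maps to exactly the expected order."""
    passed = sum(case_passes(case, extract(case.email)) for case in BENCHMARK)
    return passed / len(BENCHMARK)


# A stub extractor that always returns the same order passes only the first case.
print(benchmark_accuracy(lambda email: [OrderLine("CUP-100-EU", 2)]))  # 0.5
```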

Initial Instructions – Next, Myko captures the detailed business rules and manual steps that humans currently use to perform the task. This often involves documenting the process with the help of subject-matter experts at the company. If employees follow certain decision trees or if-then rules (even informally), those are written down. For example, how does a support agent handle an ambiguous line item in an email? By recording these initial instructions, Myko creates a starting rulebook for the AI agent. This step essentially transfers tribal knowledge from individuals into a formalized instruction set that the AI can be built around.

AI Model Development – With the benchmark examples and initial rulebook in hand, Myko develops an AI solution tailored to the task. This could involve prompt engineering, training or fine-tuning multiple large language models (LLMs), and embedding the business logic constraints into their decision-making. The AI is taught to replicate how a human would apply the business rules to the raw data. At this stage, Myko tests the AI on the benchmark dataset to gauge its initial accuracy. For instance, the AI might correctly map 70% of the sample emails to orders on the first try, and the errors are analyzed to see which rules or interpretations were missed. Myko shares these accuracy estimates with the customer transparently, so there is a baseline understanding of performance before any real-world deployment.
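Error analysis can start by labeling each benchmark miss by type, which hints at the rule that is missing. In this sketch, which assumes orders are compared as hypothetical `{sku: quantity}` maps, a wrong regional variant shows up as a `missing_sku` plus `unexpected_sku` pair, while an unapplied pack-size rule shows up as `wrong_quantity`. The label names and helpers are illustrative.

```python
from collections import Counter


def diagnose(expected: dict[str, int], predicted: dict[str, int]) -> list[str]:
    """Label each difference between expected and predicted {sku: quantity} maps."""
    issues = []
    for sku, qty in expected.items():
        if sku not in predicted:
            issues.append("missing_sku")
        elif predicted[sku] != qty:
            issues.append("wrong_quantity")
    for sku in predicted:
        if sku not in expected:
            issues.append("unexpected_sku")
    return issues


def error_report(results: list[tuple[dict[str, int], dict[str, int]]]) -> Counter:
    """Aggregate issue labels across all (expected, predicted) benchmark results."""
    report = Counter()
    for expected, predicted in results:
        report.update(diagnose(expected, predicted))
    return report


results = [
    ({"CUP-100-EU": 2}, {"CUP-100-US": 2}),  # wrong regional variant
    ({"CUP-100-EU": 6}, {"CUP-100-EU": 2}),  # pack-size rule not applied
    ({"LID-20": 5}, {"LID-20": 5}),          # correct
]
print(error_report(results))
```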

Testing in Sandbox – Once the AI model shows promising accuracy in development, Myko deploys it in a sandbox environment for real-world evaluation. The sandbox is a safe testing ground – often a staging version of the customer’s software or a parallel workflow – where the AI can process actual live data without impacting production systems. During this phase, the AI might process incoming emails or voice logs in real time and produce outputs, but those outputs are reviewed before any action is taken on them. The goal here is to see how the model performs with real variability and unseen cases, under conditions that closely mimic production. This testing can reveal new corner cases or subtle logic gaps that weren’t apparent in the initial samples. Because it’s a sandbox, any mistakes the AI makes carry no business risk, but they provide crucial insights for the next refinement step.
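A sandbox can share the same pipeline as production with only the final step switched: outputs are held for review instead of being written. This is a minimal sketch assuming a function-based pipeline; `make_handler`, `extract`, and `write_to_production` are hypothetical placeholders, not a real integration API.

```python
from typing import Callable


def make_handler(extract: Callable[[str], dict],
                 write_to_production: Callable[[dict], None],
                 review_queue: list,
                 sandbox: bool = True) -> Callable[[str], None]:
    """Build an input handler; in sandbox mode outputs are queued, never written."""
    def handle(raw_input: str) -> None:
        output = extract(raw_input)
        if sandbox:
            # Hold the output, alongside its input, until a person reviews it.
            review_queue.append({"input": raw_input, "output": output})
        else:
            write_to_production(output)
    return handle


production_writes: list = []
queue: list = []
handle = make_handler(lambda text: {"note": text.upper()}, production_writes.append, queue)
handle("order 2 cups")
print(len(queue), len(production_writes))  # 1 0
```

Because the extraction step is identical in both modes, accuracy measured in the sandbox reflects what production will see.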

Feedback Loop – As the AI operates in the sandbox, Myko and the client team establish a tight feedback loop. Every AI-generated output is validated – either by Myko’s experts, the client’s team, or both. When the AI gets something wrong or expresses uncertainty, that feedback is fed directly into improving the system. For example, if the AI failed to parse a particularly tricky email format, Myko will add that format (and how to handle it) to the instructions and retrain or adjust the model. This human-in-the-loop approach is instrumental in boosting accuracy: human feedback helps correct errors and fine-tune AI models, leading to more reliable outcomes [4]. The feedback loop might also surface hidden knowledge that wasn’t captured initially – perhaps an employee remembers a rare scenario or a new business rule comes up. Myko incorporates all of this into the next iteration. The continuous review and correction ensure that the AI’s errors approach zero before it goes live. Each cycle of feedback makes the AI smarter, essentially teaching it to handle cases that even the initial rulebook may have missed.
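One way to make the feedback loop concrete is to turn every reviewer correction into two artifacts: a new benchmark case and, when the reviewer explains the fix, a candidate instruction. The `FeedbackLog` class and its fields below are hypothetical, a sketch of the bookkeeping rather than Myko’s actual tooling.

```python
from dataclasses import dataclass, field


@dataclass
class FeedbackLog:
    """Turns reviewer corrections from the sandbox into inputs for the next iteration."""
    new_benchmark_cases: list = field(default_factory=list)
    new_instructions: list = field(default_factory=list)

    def review(self, raw_input: str, ai_output: dict, corrected: dict, note: str = "") -> bool:
        """Record one reviewed output; return True if the AI was already correct."""
        if corrected == ai_output:
            return True
        # Each correction becomes a regression case, so later iterations
        # are checked against it.
        self.new_benchmark_cases.append((raw_input, corrected))
        if note:
            # The reviewer's explanation is kept as a candidate rule for the instructions.
            self.new_instructions.append(note)
        return False


log = FeedbackLog()
log.review("2 cups pls", {"CUP-100-EU": 2}, {"CUP-100-EU": 2})
log.review("2 cups pls", {"CUP-100-EU": 2}, {"CUP-100-EU": 6},
           note="This customer orders cups in packs of 3.")
print(len(log.new_benchmark_cases), log.new_instructions)
```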

Iterative Improvement – Myko repeats the develop → test → feedback cycle as many times as needed to reach the target accuracy. After each iteration, the improvement is measured: for instance, accuracy might rise from 85% to 95% after a few feedback cycles, and then to 99% after a few more targeted fixes. These gains are tracked and shared, so the enterprise can see the progress in quantifiable terms. This iterative improvement is a disciplined process – the AI is only considered ready when it consistently meets or exceeds the pre-agreed accuracy benchmark (which could be, say, 98%+ correct mappings) in the sandbox. Throughout this process, Myko is effectively capturing more business logic (in the form of new rules or clarifications) and reducing uncertainty in the AI’s performance. The iterative approach also builds trust: stakeholders can witness the AI improving over time, and they contribute to its learning, so by the end of this phase there is confidence that the AI will perform as expected in production.
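The “consistently meets the benchmark” condition can be expressed as a simple readiness gate. This sketch assumes “consistently” means every one of the last few sandbox evaluations clears the target; the 98% target follows the example above, while the window of 3 runs and the helper name are illustrative assumptions.

```python
def ready_for_production(history: list[float], target: float = 0.98, window: int = 3) -> bool:
    """True once each of the last `window` sandbox evaluations meets `target` accuracy."""
    if len(history) < window:
        return False
    return all(accuracy >= target for accuracy in history[-window:])


# Illustrative accuracy history across develop, test, and feedback cycles.
history = [0.70, 0.85, 0.95, 0.99]
print(ready_for_production(history))  # False: 0.85 and 0.95 are in the last 3 runs
history += [0.985, 0.99]
print(ready_for_production(history))  # True
```

Requiring several consecutive passing runs, rather than one, guards against a single lucky evaluation.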

Production Deployment & Guarantee – Typically, Myko is able to go from signed contract to deployment in a matter of weeks rather than months. After rigorous testing and refinement, Myko deploys the AI agent into the production environment (the live enterprise workflow) with a guarantee of its accuracy. At this stage, the AI takes over the heavy lifting of processing raw data into the system, and because of all the embedded business logic, it does so with a very high degree of certainty. Myko is confident enough to guarantee the accuracy of the mappings. For the enterprise, this means they can trust the AI output: any edge case that can’t be handled confidently is flagged for human review rather than mistakenly processed. The result is a smooth deployment where the AI delivers immediate value (speed and efficiency gains) without the usual period of doubt about whether it’s getting things right. By the time of full rollout, all parties know the AI’s capabilities and limits, and the system has essentially been “certified” by the benchmark tests and iterative improvements. Myko’s guarantee provides peace of mind that accuracy will remain high, and if any new scenario arises in production, the same iterative logic extraction process will kick in to address it. In short, the workflow is automated but remains correct and dependable, because the AI is operating with the complete set of business logic it needs.
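Flagging uncertain edge cases for human review can be sketched as a routing step in front of the production write. This assumes each extraction carries a calibrated confidence score in [0, 1], which the text does not specify; the threshold, required fields, and names are hypothetical.

```python
from dataclasses import dataclass

# Fields an order must have before it is written automatically (hypothetical).
REQUIRED_FIELDS = ("customer_id", "sku", "quantity")


@dataclass
class Extraction:
    record: dict
    confidence: float  # assumed to be a calibrated score in [0, 1]


def route(extraction: Extraction, threshold: float = 0.95) -> str:
    """Send an extraction to automatic processing or to human review."""
    missing = [f for f in REQUIRED_FIELDS if extraction.record.get(f) in (None, "")]
    if missing or extraction.confidence < threshold:
        return "human_review"
    return "auto_process"


complete = {"customer_id": "acme-gmbh", "sku": "CUP-100-EU", "quantity": 6}
print(route(Extraction(complete, 0.99)))                    # auto_process
print(route(Extraction(complete, 0.60)))                    # human_review
print(route(Extraction({**complete, "sku": None}, 0.99)))  # human_review
```

An incomplete record goes to review even at high confidence, so a missing field is never silently written to the live system.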

Through these steps, Myko ensures that accuracy is never left to chance. Each phase reinforces the AI with more knowledge or validation, bridging the gap between raw data and the actionable, error-free output that enterprises require. This comprehensive process is what allows Myko to confidently stand behind the promise of “guaranteed accuracy” in converting unstructured data to structured outcomes.

Conclusion

Myko bridges the gap between raw data and actionable insights by embedding business logic into AI, turning unstructured data challenges into strengths. The solution described in this whitepaper addresses the root causes of AI inaccuracies in enterprise settings: it brings the “invisible” operational knowledge of a business to the surface and into the AI’s decision-making process. In doing so, Myko enables organizations to finally trust AI agents with mission-critical processes that were previously too error-prone to automate.

The value proposition for enterprises is clear. With Myko, companies can achieve the efficiency and scale of AI-driven automation without sacrificing accuracy or compliance. Workflows like email order entry or customer support logging, once bogged down by manual effort or unreliable algorithms, can now run seamlessly. Employees are freed from tedious data processing tasks and can focus on higher-value work, knowing that the AI will handle the routine correctly. Moreover, Myko’s guaranteed accuracy model de-risks AI adoption – stakeholders have measurable proof of performance and a safety net that the system will do the right thing (or not act until it’s sure). This assurance is especially important for decision-makers who are accountable for results and wary of AI hype. By delivering verifiable accuracy improvements and sharing the logic behind AI decisions, Myko builds trust in AI as a dependable co-worker rather than a mysterious black box.

In summary, Myko’s business logic-centric method transforms how enterprises can leverage AI. It demonstrates that when AI is combined with a structured understanding of business processes, it can reliably convert raw, unstructured data into the lifeblood of enterprise systems – be it orders, CRM records, or any actionable data – with speed and precision. For enterprise leaders looking to improve AI-driven workflows, the takeaway is to insist on accuracy and transparency. Myko offers a pathway to achieve both, by ensuring that the AI learns your business as thoroughly as your best employee and delivers results you can count on.

Want to see how Myko can deliver immediate value for your enterprise teams?

Book a demo with Myko here.

References

[1] MIT Sloan. Tapping the Power of Unstructured Data

[2] Why Enterprise AI Fails Without a Semantic Layer

[3] Why AI might finally break Polanyi's Paradox

[4] Human in the Loop AI: Keeping AI Aligned with Human Values